AWS Networking Blueprints

In any organization with more than one or two teams building infrastructure, there needs to be a strategy for connectivity between applications. More specifically, you’re inevitably going to need to send traffic between different VPCs (‘Virtual Private Clouds’).

There are a number of potential approaches which can be adopted to do this, and each of these comes with its own set of trade-offs. In this post, I’ll be exploring the options, and providing enough context to help you decide which makes sense for you.

Everything in a single account and VPC

You can’t have inter-VPC networking problems if everything runs in a single VPC in a single account, right?

I wouldn’t recommend this approach, even for the smallest production workload. Using a single account means everything shares a single ‘blast radius’: something you break while testing in development can take down your production workload.

At a minimum I’d suggest two accounts - one for development/testing, one for production. If you’re building using infrastructure-as-code (and you should be), you can use the same templates to build out the two accounts, and you can have confidence that any change you make in the production environment has already been tested first. I talk about this in more depth in the article ‘How many AWS accounts do you need?’

If you don’t need any connectivity between your non-production and production environments, and you only have a single account for each, then you don’t need to worry too much about the solutions discussed in the rest of this article yet. Make sure you bookmark it for when you have more apps to add.

Everything in a shared VPC

With the introduction of ‘Resource Access Manager’ (RAM) in AWS, it’s possible to share a VPC between multiple accounts (strictly, the VPC owner shares individual subnets with other accounts in the same AWS Organization). This removes some of the concerns around blast radius and makes billing attribution easy (for charge-back scenarios), while still letting you keep a single VPC to avoid complexity.

Doing things this way means that you can easily have centralized resources such as managed NAT gateways and VPN connections shared between all your accounts. You can also use security group references between applications, simplifying least-privilege access.

There are a couple of ways you might want to utilise this approach, and I’ve detailed them below.

Shared VPC and shared subnets

This is the simplest approach to implement. You have one VPC and a shared set of subnets. You can use RAM to share with every account in an organizational unit, so you could have one VPC and set of subnets shared with all production accounts, and another shared with all non-production accounts, with no code required.

One downside to this approach is that you are sharing subnets, so if someone spins up a large number of instances, or uses up many IP addresses in some other way (Lambda, Glue, etc.), the subnets can run out of addresses and nobody else can launch anything: a company-wide outage.
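
To put the exhaustion risk in numbers: AWS reserves five addresses in every subnet (the first four and the last), so a /24 leaves 251 usable addresses. The sketch below uses Python's standard `ipaddress` module; the shared subnet and the per-team ENI counts are hypothetical, chosen only to show how one team's burst can leave almost nothing for anyone else.

```python
import ipaddress

# AWS reserves five addresses in every subnet: the first four and the last one.
AWS_RESERVED_PER_SUBNET = 5


def usable_addresses(cidr: str) -> int:
    """Number of addresses in `cidr` that can actually be assigned to ENIs."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


def remaining_addresses(cidr: str, enis_by_team: dict) -> int:
    """Addresses left in a shared subnet after every team's ENIs are placed."""
    return usable_addresses(cidr) - sum(enis_by_team.values())


# Hypothetical shared subnet and per-team ENI usage.
shared = "10.0.1.0/24"
usage = {"web": 40, "batch": 200}
print(usable_addresses(shared))            # 251
print(remaining_addresses(shared, usage))  # 11
```

Once the remaining count reaches zero, every team sharing that subnet is blocked, not just the one that consumed the addresses.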

This approach can also create problems if you have connectivity to resources outside of AWS - for example a VPN to Azure, or a Direct Connect back to a traditional data center. Because all applications share an address space, it is not possible to allow traffic only from specific apps via ACLs or firewalls within the data center. You may be able to mitigate this by using egress firewall rules in AWS, but this quickly becomes a classic example of complexity avoided in one area emerging as different complexity elsewhere.

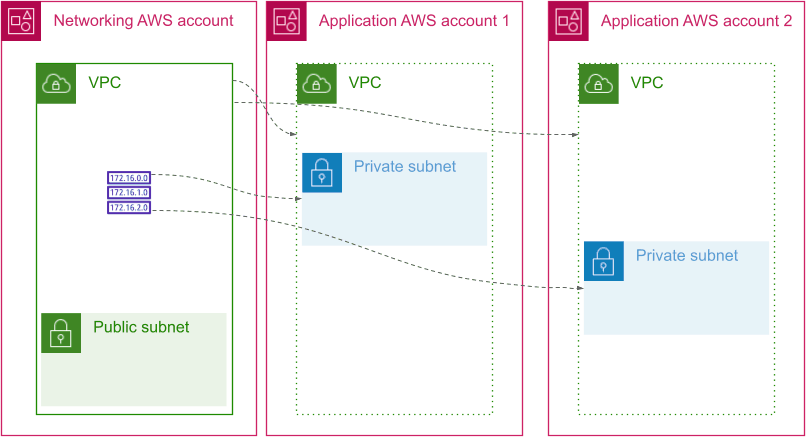

Shared VPC and per account subnets

In order to deal with the concerns listed above, you may instead choose to deploy a single shared VPC, but with each subnet used by only a single account. This means IP space exhaustion in one account cannot affect other applications, and firewalls outside AWS can use each account's IP address ranges to scope access more tightly.
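
Per-account subnets let a firewall outside AWS tell applications apart by source address. A minimal sketch of the check such a rule effectively performs, assuming a hypothetical mapping of account names to subnet CIDRs:

```python
import ipaddress
from typing import Optional

# Hypothetical per-account subnet allocation inside one shared VPC.
ACCOUNT_SUBNETS = {
    "payments": ipaddress.ip_network("10.0.0.0/24"),
    "analytics": ipaddress.ip_network("10.0.1.0/24"),
}


def owning_account(source_ip: str) -> Optional[str]:
    """Return the account whose subnet contains `source_ip`, if any."""
    addr = ipaddress.ip_address(source_ip)
    for account, subnet in ACCOUNT_SUBNETS.items():
        if addr in subnet:
            return account
    return None


def allowed(source_ip: str, permitted_accounts: set) -> bool:
    """Emulates a data center firewall rule scoped to specific accounts' subnets."""
    return owning_account(source_ip) in permitted_accounts


print(allowed("10.0.0.17", {"payments"}))  # True
print(allowed("10.0.1.17", {"payments"}))  # False
```

With shared subnets, both applications would draw addresses from the same CIDR, so no source-address rule could separate them.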

In order to work in this way, you’ll want to write some automation to provision subnets for new accounts. For smaller setups, you could probably get away with a shared spreadsheet and some manual setup work.
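
The allocation logic at the heart of that automation can be small. A minimal sketch, assuming a hypothetical 10.0.0.0/16 VPC carved into /24 subnets handed out one per account in order. A real implementation would also need to persist allocations, probably allocate one subnet per AZ, and actually create and share the subnets in AWS.

```python
import ipaddress


class SubnetAllocator:
    """Hands out fixed-size, non-overlapping subnets from a VPC CIDR, one per account."""

    def __init__(self, vpc_cidr: str, new_prefix: int = 24):
        # Lazily yields successive subnets of the requested size, in address order.
        self._free = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
        self.allocations = {}

    def allocate(self, account_id: str) -> ipaddress.IPv4Network:
        # Idempotent: an account that already has a subnet gets the same one back.
        if account_id in self.allocations:
            return self.allocations[account_id]
        try:
            subnet = next(self._free)
        except StopIteration:
            raise RuntimeError("VPC address space exhausted") from None
        self.allocations[account_id] = subnet
        return subnet


allocator = SubnetAllocator("10.0.0.0/16")
print(allocator.allocate("111111111111"))  # 10.0.0.0/24
print(allocator.allocate("222222222222"))  # 10.0.1.0/24
print(allocator.allocate("111111111111"))  # 10.0.0.0/24
```

Making allocation idempotent means the same automation can safely be re-run whenever an account is (re)provisioned.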

General problems with the shared VPC approach

Using shared VPCs, either with or without shared subnets, has its challenges. Firstly, whilst blast radius is reduced, there are still AWS limits and quotas, both documented and undocumented, which apply to shared VPCs.

For example, it is still possible to hit the quota on the number of Elastic Network Interfaces per VPC, preventing every account from starting new instances, or potentially from invoking new Lambda functions.

Before embarking on this approach in a large environment, I strongly suggest discussing it with an AWS solutions architect. At the time of writing, details of some of the undocumented limits you may hit are only available under NDA.

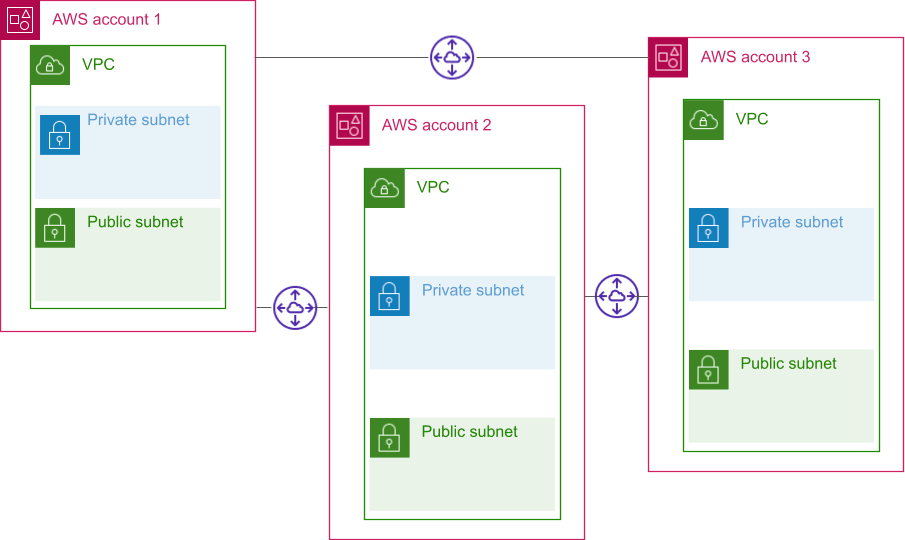

Separate VPCs with ad-hoc peering

For a long time, this was the only multi-VPC connectivity approach available. As a result, you’ll see many articles and examples talking about working in this way.

Unfortunately, there are a number of limitations with this approach. Firstly, because every connection is point-to-point, it quickly becomes messy and difficult to manage. Whilst it is possible to write automation which removes some of the pain here, it’s not necessarily straightforward to answer what should be simple questions like “Can account A talk to account B?”.
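
The mess grows quadratically: a full mesh between n VPCs needs n(n-1)/2 peering connections, each a separate resource with route table entries on both sides. A quick calculation:

```python
def full_mesh_peerings(vpc_count: int) -> int:
    """Peering connections needed for every VPC to reach every other VPC directly."""
    return vpc_count * (vpc_count - 1) // 2


for n in (5, 20, 50):
    print(n, full_mesh_peerings(n))
# 5 10
# 20 190
# 50 1225
```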

Additionally, although poorly documented, peered VPCs share some AWS network limits. This is particularly problematic if you have one or more centralized accounts which all other accounts peer with, because peered VPCs then become, at least partially, part of the same blast radius.

It is important to know that VPC peering connections do not support transitive routing: you cannot route through a peered VPC to reach other resources. For example, if you have a VPN connection into VPC A, you cannot route from peered VPC B to that VPN connection via VPC A.
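
Because peering is not transitive, answering “can B reach X?” means looking only at B's direct peers and B's own gateways, never at what its peers can reach. A minimal model of that rule, assuming a hypothetical topology in which VPC A holds the VPN and peers with both B and C:

```python
# Hypothetical topology: each peering connection is an unordered pair of VPCs.
PEERINGS = {frozenset({"vpc-a", "vpc-b"}), frozenset({"vpc-a", "vpc-c"})}
# Hypothetical gateways: the VPN connection terminates in VPC A only.
GATEWAYS = {"vpc-a": {"vpn"}}


def can_reach_vpc(src: str, dst: str) -> bool:
    """Only directly peered VPCs can route to each other; peers of peers cannot."""
    return src == dst or frozenset({src, dst}) in PEERINGS


def can_use_gateway(src: str, gateway: str) -> bool:
    """A VPC can only use gateways attached to itself, never a peer's."""
    return gateway in GATEWAYS.get(src, set())


print(can_reach_vpc("vpc-b", "vpc-a"))  # True
print(can_reach_vpc("vpc-b", "vpc-c"))  # False: no transit through vpc-a
print(can_use_gateway("vpc-a", "vpn"))  # True
print(can_use_gateway("vpc-b", "vpn"))  # False, despite peering with vpc-a
```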

Whilst VPC peering itself costs nothing, it should be noted that this approach may add additional cost because you cannot re-use NAT gateways in the way that you can with some other approaches. As such, you should be careful when comparing costs against other options.

Separate VPCs with AWS PrivateLink Endpoints

Arguably the most secure approach, and for most use cases the most expensive, is to have no direct cross-account or cross-VPC connectivity at all. Instead, PrivateLink endpoints are used to expose only specific load balancers from other VPCs into the VPC where they will be accessed.

This configuration is highly secure because it requires a conscious effort to expose resources to other accounts. This makes it difficult to inadvertently expose unnecessary services, and hard for an attacker to move laterally within your AWS environment. From a security perspective, one downside to this configuration is that you cannot use security group references to allow or deny traffic. For highly security-oriented deployments, where each app runs in its own account and only API endpoints are exposed, this limitation should not be a major concern.

The extra cost comes from the 1c/hour per AZ charge per PrivateLink endpoint. This means that, assuming you are using three AZs, you need to pay 3c per hour per accessing VPC per service exposed. For services that need to be available from many accounts, this can add up. Additionally, as each account is separate, each will need to provision its own set of NAT gateways and VPN connections.
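
To see how this adds up, here is a back-of-the-envelope sketch using the 1c per AZ per hour figure above. It assumes 730 hours in a month and ignores per-GB data processing charges; the service and VPC counts are hypothetical. Costs are kept in integer cents to avoid floating-point rounding.

```python
HOURLY_CENTS_PER_AZ = 1  # endpoint charge quoted above; varies by region
HOURS_PER_MONTH = 730    # assumption: average hours in a month


def monthly_endpoint_cost_cents(services: int, consumer_vpcs: int, azs: int = 3) -> int:
    """One endpoint per exposed service in every consuming VPC, billed per AZ per hour.
    Excludes data processing charges."""
    return HOURLY_CENTS_PER_AZ * azs * services * consumer_vpcs * HOURS_PER_MONTH


cost = monthly_endpoint_cost_cents(services=1, consumer_vpcs=20)
print(f"${cost / 100:,.2f}")  # $438.00
```

The cost scales linearly in both the number of services and the number of consuming VPCs, so exposing five services to the same 20 VPCs would be five times this figure.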

For those needing PCI compliance, it makes sense to run your PCI environment in isolation, with PrivateLink endpoints exposing services into and out of it only where necessary. This can limit the scope of what is required for PCI auditing.

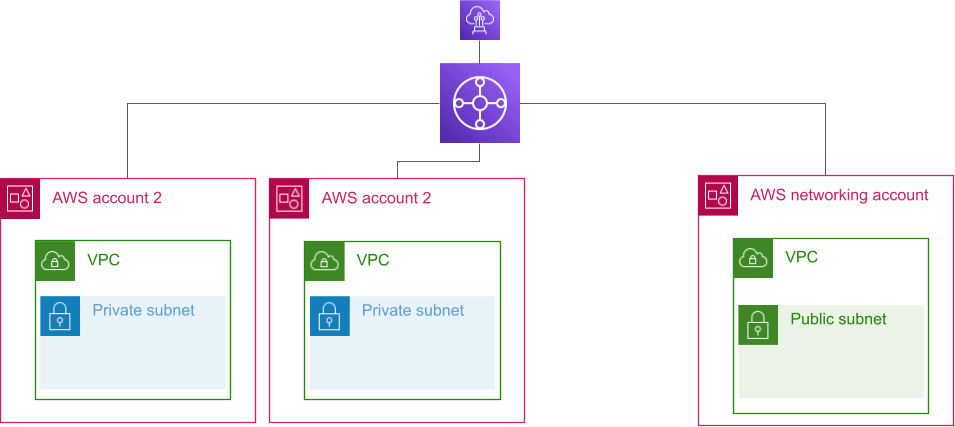

Separate VPCs with Transit Gateway

AWS Transit Gateway is the approach which will feel most familiar to those used to data center networking. It is essentially a BGP router which allows routing to, from and between VPCs, VPNs and AWS Direct Connect.
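
Like any router, a Transit Gateway route table sends each packet to the attachment with the most specific matching route. A minimal sketch of that longest-prefix-match lookup, assuming a hypothetical route table with two VPC attachments, an on-premises VPN, and a default route to a central egress VPC (the attachment names are illustrative, not real IDs):

```python
import ipaddress
from typing import Optional

# Hypothetical Transit Gateway route table: destination CIDR -> attachment.
ROUTES = {
    "10.0.0.0/16": "vpc-app-a",
    "10.1.0.0/16": "vpc-app-b",
    "192.168.0.0/16": "vpn-onprem",
    "0.0.0.0/0": "vpc-egress",  # central NAT gateways live here
}


def next_hop(destination: str) -> Optional[str]:
    """Return the attachment for the most specific route containing `destination`."""
    addr = ipaddress.ip_address(destination)
    matches = [
        ipaddress.ip_network(cidr)
        for cidr in ROUTES
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no route: the traffic is dropped
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[str(best)]


print(next_hop("10.0.3.7"))  # vpc-app-a (the /16 beats the default route)
print(next_hop("8.8.8.8"))   # vpc-egress
```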

The only real limitation to note is that it is currently not possible to do security group referencing across Transit Gateway. This was mentioned as a roadmap item at the launch of Transit Gateway in 2018, but as of writing this article still isn’t available.

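One factor to bear in mind is that every VPC you connect to the Transit Gateway needs a Transit Gateway attachment, which places a network interface in one subnet per availability zone. Each attachment is billed by the hour, and traffic through the Transit Gateway also incurs a per-GB data processing charge. These costs will potentially be offset by removing the need to have a NAT Gateway in every VPC.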
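
Whether that offset works out depends on your VPC count. The sketch below compares one NAT gateway per AZ in every VPC against a Transit Gateway attachment per VPC (plus one for a central egress VPC) and a single shared set of NAT gateways. The hourly rates are assumptions, roughly us-east-1 list prices at the time of writing, so check current pricing for your region. Per-GB data processing charges are ignored.

```python
from decimal import Decimal

# Assumed hourly rates in USD; check current pricing for your region.
NAT_GATEWAY_HOURLY = Decimal("0.045")
TGW_ATTACHMENT_HOURLY = Decimal("0.05")


def per_vpc_nat_hourly(vpcs: int, azs: int = 3) -> Decimal:
    """Every VPC runs its own NAT gateway in each AZ."""
    return vpcs * azs * NAT_GATEWAY_HOURLY


def shared_nat_via_tgw_hourly(vpcs: int, azs: int = 3) -> Decimal:
    """Every VPC plus the egress VPC has a TGW attachment; one shared set of NAT gateways."""
    return (vpcs + 1) * TGW_ATTACHMENT_HOURLY + azs * NAT_GATEWAY_HOURLY


for n in (2, 20):
    print(n, per_vpc_nat_hourly(n), shared_nat_via_tgw_hourly(n))
# 2 0.270 0.285
# 20 2.700 1.185
```

Under these assumptions, per-VPC NAT gateways are slightly cheaper for a couple of VPCs, while the shared model wins comfortably at twenty.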

Transit Gateway allows you to share NAT gateways, VPN connections and Direct Connect between all of your VPCs. With Transit Gateway peering, you can create cross-Region routing in a relatively simple manner. For any organization with more than a couple of dozen accounts, Transit Gateway is an attractive solution.

Mix and Match

The great thing is that you don’t need to pick one option and stick with it: it’s possible to use multiple approaches simultaneously. You could, for example, choose to use isolated VPCs with PrivateLink endpoints exposed into them for accounts which handle your most critical data, and a shared VPC for the rest.

Key Points to Remember

- VPCs have both documented and undocumented limits/quotas.

- Shared VPCs mean sharing these limits.

- Peering VPCs often means sharing these limits too.

- It’s OK to mix and match approaches - for large environments it is definitely desirable.

Suggested Approach

If you’re starting out with zero AWS footprint, I would suggest the shared VPC with per-account subnets approach as a good way to start. Once you’ve dealt with the initial hurdle of automating subnet creation and allocation, it gives you a configuration that is comparatively simple to manage and allows you to easily share resources such as NAT gateways and VPN connections.

The reality for most readers of this post is likely to be that there is already some level of AWS usage, and it is questionable whether the benefits of a shared VPC are enough to justify the pain of rebuilding applications in a new VPC. In these situations, Transit Gateway is a great option to get to a position where you can have consistent cross-VPC connectivity, and re-use common resources such as NAT Gateways, VPN connections and Direct Connect.

Transit Gateway quickly makes sense as your VPC count increases, and most organizations will likely adopt it at some point. It allows multiple approaches to be used simultaneously in a manageable fashion. Because moving applications to new VPCs is often a significant undertaking, even in an environment where everything is created via infrastructure-as-code, it is almost inevitable that this is where most will end up.